Introduction¶

The High Throughput Computing (HTC) service on the SCIGNE platform is based on a computing cluster optimised for processing data-parallel tasks. This cluster is connected to a larger infrastructure, the EGI European Grid Infrastructure.

Although the recommended way to interact with the HTC service is to use the DIRAC software, you can also manage your jobs directly through the Computing Element (CE) of the SCIGNE platform. The CE software installed at SCIGNE is ARC CE.

After a brief introduction to how a computing grid works, this documentation explains how to submit your calculations, monitor them and retrieve their results with the ARC CE client.

The Computing Grid¶

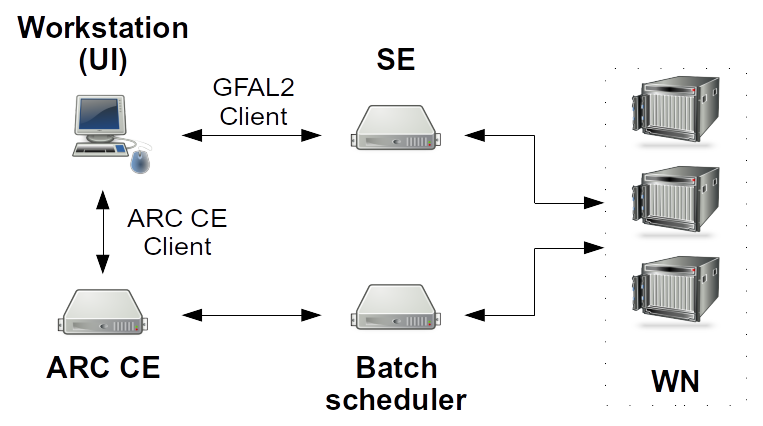

This section presents the different services involved in submitting a job on the computing grid. The interaction between these services during a job’s worklow is illustrated in the figure below. The acronyms are detailed in the table The main services of the computing grid.

Job workflow on the grid infrastructure¶

Users manage computing jobs with DIRAC. Before a job is submitted, large data files (> 10 MB) must be copied to the Storage Element (SE); how the SE works is detailed in the documentation about grid storage. These data will then be accessible from the compute nodes.

Once the data are available, the job can be submitted to the DIRAC service, which selects the site(s) where the computation will run. DIRAC makes this selection based on site availability and the calculation prerequisites specified by the user. After selecting a site, DIRAC submits the job to the Computing Element (CE), which distributes the jobs to the Worker Nodes (WN). When the computation is complete and the results have been copied to an SE, the CE collects the execution information and sends it back to DIRAC.

While the computation is in progress, the user can query DIRAC to monitor its status.

The main services of the computing grid

| Element | Role |
|---|---|
| UI | A UI (User Interface) is a workstation or server where DIRAC is installed and configured. It enables users to submit jobs to the grid and monitor their status. |
| SE | The SE (Storage Element) is the component of the grid that manages data storage. It is used to store the input data needed by jobs and to retrieve the results they produce. The SE is accessible via various transfer protocols. |
| ARC CE | The ARC CE (Computing Element) is the server that interacts directly with the queue manager of the computing cluster. It allows jobs to be submitted to the local cluster. |
| WN | The WN (Worker Node) is the server that executes the job. It connects to the SE to retrieve the input data required by the job and can also copy the results back to the SE once the job is complete. |

Prerequisites¶

In order to submit a job to an ARC CE, the following two prerequisites must be met:

Have a workstation with the ARC CE client installed.

Have a valid certificate registered with a Virtual Organisation (VO). For more information, see the document Managing a certificate.

ARC CE Client¶

Jobs are managed with the ARC CE client, a command-line tool.

If you have an account at IPHC, you can simply use the UI server, as the ARC CE client is already installed there.

You can also install the ARC CE client on your own GNU/Linux workstation:

With Ubuntu:

$ sudo apt install nordugrid-arc-client

With Red Hat Enterprise Linux and its derivatives (e.g. AlmaLinux):

$ sudo dnf install -y epel-release
$ sudo dnf update
$ sudo dnf install -y nordugrid-arc-client
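Once the package is installed, a quick way to confirm that the client commands used in this guide can be found is a small check such as the one below. It is a minimal sketch: the function name check_commands is hypothetical, and it only verifies that each command is on your PATH, not that it is correctly configured.

```shell
#!/bin/bash
# Hypothetical helper: report which of the given commands cannot be found.
# Returns 0 if all of them are available, 1 otherwise.
check_commands() {
    local missing=0
    local cmd
    for cmd in "$@"; do
        if ! command -v "$cmd" >/dev/null 2>&1; then
            echo "missing: $cmd" >&2
            missing=1
        fi
    done
    return "$missing"
}
```

For example, `check_commands arcproxy arcsub arcstat arcget && echo "ARC CE client OK"` prints the confirmation only if the four commands used below are installed.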

Certificate¶

The private and public parts of the certificate must be placed in the ${HOME}/.globus directory on the server from which jobs will be submitted. These files must have read permissions restricted to the owner only:

$ ls -l $HOME/.globus
-r-------- 1 user group 1935 Feb 16 2025 usercert.pem
-r-------- 1 user group 1920 Feb 16 2025 userkey.pem
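If the permissions shown by ls -l differ, they can be tightened with chmod. The sketch below wraps this in a hypothetical function, secure_globus, which sets mode 400 (read-only for the owner) on both files and then checks the result with GNU stat. The default directory and file names are the ones used in this guide; another directory can be passed as an argument.

```shell
#!/bin/bash
# Hypothetical helper: restrict the certificate files to owner read-only (mode 400).
# Usage: secure_globus [directory]   (defaults to $HOME/.globus)
secure_globus() {
    local dir="${1:-$HOME/.globus}"
    local f
    for f in usercert.pem userkey.pem; do
        if [ ! -f "$dir/$f" ]; then
            echo "not found: $dir/$f" >&2
            return 1
        fi
        chmod 400 "$dir/$f" || return 1
        # GNU stat: %a prints the permission bits in octal
        if [ "$(stat -c %a "$dir/$f")" != "400" ]; then
            echo "unexpected permissions on $dir/$f" >&2
            return 1
        fi
    done
}
```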

In this documentation, we use the VO vo.scigne.fr, which is the regional VO. You should replace this VO name with the one you are using (e.g., biomed).

Job Management with the ARC CE Client¶

Proxy generation¶

Before submitting a job to the computing grid, you must generate a valid proxy. This temporary certificate allows you to authenticate with all grid services. It is generated with the following command:

$ arcproxy -S vo.scigne.fr -c validityPeriod=24h -c vomsACvalidityPeriod=24h

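The -S option selects the VO. Each -c option sets a validity constraint: validityPeriod applies to the proxy itself, and vomsACvalidityPeriod to the VOMS attribute certificate (AC) it contains. A value given without a unit is interpreted as a number of seconds, so the unit (h for hours) should be specified.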

The following command displays information about your proxy, including its remaining validity:

$ arcproxy -I
Subject: /DC=org/DC=terena/DC=tcs/C=FR/O=Centre national de la recherche scientifique/CN=Your Name/CN=265213680
Issuer: /DC=org/DC=terena/DC=tcs/C=FR/O=Centre national de la recherche scientifique/CN=Your Name
Identity: /DC=org/DC=terena/DC=tcs/C=FR/O=Centre national de la recherche scientifique/CN=Your Name
Time left for proxy: 23 hours 59 minutes 48 seconds
Proxy path: /tmp/x509up_u1234
Proxy type: X.509 Proxy Certificate Profile RFC compliant impersonation proxy - RFC inheritAll proxy
Proxy key length: 2048
Proxy signature: sha384
====== AC extension information for VO vo.scigne.fr ======
VO : vo.scigne.fr
subject : /DC=org/DC=terena/DC=tcs/C=FR/O=Centre national de la recherche scientifique/CN=Your Name
issuer : /DC=org/DC=terena/DC=tcs/C=FR/L=Paris/O=Centre national de la recherche scientifique/CN=voms-scigne.ijclab.in2p3.fr
uri : voms-scigne.ijclab.in2p3.fr:443
attribute : /vo.scigne.fr
Time left for AC: 11 hours 59 minutes 52 seconds
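Because the proxy expires, it can be convenient to regenerate it only when little validity remains. The function below is a sketch under assumptions: it relies on arcproxy's -i (information item) option with the validityLeft item, assumed here to print the remaining proxy lifetime in seconds (check arcproxy --help on your installation). The function name ensure_proxy and the 6-hour default threshold are arbitrary choices.

```shell
#!/bin/bash
# Hypothetical helper: create a new proxy if the current one is missing
# or has less than MIN_HOURS hours of validity left.
# Assumption: "arcproxy -i validityLeft" prints the remaining lifetime in seconds.
# Usage: ensure_proxy [VO] [MIN_HOURS]
ensure_proxy() {
    local vo="${1:-vo.scigne.fr}"
    local min_hours="${2:-6}"
    local left
    left=$(arcproxy -i validityLeft 2>/dev/null)
    # Treat a missing proxy or a non-numeric answer as "no time left"
    case "$left" in
        ''|*[!0-9]*) left=0 ;;
    esac
    if [ "$left" -lt $(( min_hours * 3600 )) ]; then
        echo "Proxy missing or expiring soon: generating a new one for $vo" >&2
        arcproxy -S "$vo" -c validityPeriod=24h -c vomsACvalidityPeriod=24h
    else
        echo "Proxy still valid for $(( left / 3600 )) hours" >&2
    fi
}
```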

Job submission¶

Submitting a job with the ARC CE client requires a text file containing instructions in xRSL (extended Resource Specification Language) format. This file specifies the characteristics of the job and its requirements.

The xRSL file presented in the example below is fully functional and can be used to run a simple job. In this document, the file is named myjob.xrsl.

&
(executable = "/bin/bash")
(arguments = "myscript.sh")
(jobName="mysimplejob")
(inputFiles = ("myscript.sh" "") )
(stdout = "stdout")
(stderr = "stderr")
(gmlog="simplejob.log")
(wallTime="240")
(runTimeEnvironment="ENV/PROXY")
(count="1")
(countpernode="1")

Each attribute of this example has a specific role:

executable defines the command to be executed on the compute nodes. It can be the name of a shell script.

arguments specifies the arguments to pass to the program defined by the executable attribute; in this example, myscript.sh is a bash script that will be passed as an argument to the bash command.

jobName defines the name of the job.

inputFiles lists the files needed for the computation that must be sent to the ARC CE. An empty string (“”) after a file name indicates that the file is available locally, in the directory from which the ARC CE commands are run.

stdout specifies the name of the file where standard output will be redirected.

stderr specifies the name of the file where error messages will be redirected.

gmlog is the name of the directory in which the ARC CE stores diagnostic information (logs) about the job.

wallTime is the maximum execution time (wall-clock time) of the job. Without a unit, the value is interpreted in minutes, so "240" allows 4 hours.

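runTimeEnvironment indicates the execution environment required by the job. Here, ENV/PROXY makes the user's proxy available to the job, which commands accessing the SE, such as gfal-copy, need.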

count is an integer giving the number of tasks executed simultaneously (this value is greater than 1 for MPI jobs, for example).

countpernode is an integer indicating how many tasks should run on a single server. If all tasks need to run on the same server, the values of the count and countpernode attributes should be identical.
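The attributes above can also be written from a script, which is handy when several similar jobs differ only in a few values. The function below is a hypothetical helper, not part of the ARC client: it writes an xRSL file with the same attributes as myjob.xrsl, taking the output file, job name, script name and wall time (in minutes) as parameters.

```shell
#!/bin/bash
# Hypothetical helper: write an xRSL job description similar to myjob.xrsl.
# Usage: write_xrsl OUTFILE JOBNAME SCRIPT WALLTIME_MINUTES
write_xrsl() {
    local out="$1" name="$2" script="$3" walltime="$4"
    cat > "$out" <<EOF
&
(executable = "/bin/bash")
(arguments = "$script")
(jobName = "$name")
(inputFiles = ("$script" ""))
(stdout = "stdout")
(stderr = "stderr")
(gmlog = "$name.log")
(wallTime = "$walltime")
(runTimeEnvironment = "ENV/PROXY")
(count = "1")
(countpernode = "1")
EOF
}
```

For instance, `write_xrsl myjob.xrsl mysimplejob myscript.sh 240` produces a description equivalent to the example above, except that the gmlog directory is named after the job (mysimplejob.log).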

An example of the myscript.sh script is shown below:

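#!/bin/sh
echo "===== Begin ====="
date
# Print the name of the worker node ($HOSTNAME is not guaranteed under /bin/sh)
echo "The program is running on $(hostname)"
date
# Create a 1 GB file filled with random data
dd if=/dev/urandom of=fichier.txt bs=1G count=1
# Copy the file to the SE (replace lab/name with your own directory)
gfal-copy "file://$(pwd)/fichier.txt" root://sbgdcache.in2p3.fr/vo.scigne.fr/lab/name/fichier.txt
echo "===== End ====="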
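Since a syntax error in myscript.sh would only show up once the job runs on a worker node, it can be worth checking the script locally before submitting it. A minimal sketch, assuming the script is run by /bin/bash as specified in myjob.xrsl: bash -n parses the file without executing any of its commands. The function name check_script_syntax is hypothetical.

```shell
#!/bin/bash
# Hypothetical helper: parse a job script without running it.
# Returns 0 if bash accepts the syntax, 1 otherwise.
check_script_syntax() {
    local script="${1:-myscript.sh}"
    if bash -n "$script"; then
        echo "$script: syntax OK"
    else
        echo "$script: syntax error, fix it before running arcsub" >&2
        return 1
    fi
}
```

This check does not verify that the commands called by the script are installed on the worker nodes.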

The executables called in the myscript.sh script must be installed on the grid. This software is installed by the support team of the SCIGNE platform; do not hesitate to contact them with any enquiry.

The gfal-copy command copies files to an SE. When you submit jobs at SCIGNE, it is recommended to use the sbgdcache.in2p3.fr SE, as it is the closest one. The usage of the SE is described in the dedicated guide.
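In myscript.sh, a failed gfal-copy would go unnoticed: the script would still print its end marker, and its exit code would be that of the final echo. A more defensive upload step is sketched below; it is an assumption-based variant, not the SCIGNE reference script, and the function name upload_to_se is hypothetical.

```shell
#!/bin/sh
# Hypothetical helper for the job script: copy a local file to the SE and
# report a failure through a non-zero return code.
# Usage: upload_to_se LOCAL_FILE DESTINATION_URL
upload_to_se() {
    src="$1"
    dest="$2"
    if ! gfal-copy "file://$(pwd)/$src" "$dest"; then
        echo "gfal-copy of $src to $dest failed" >&2
        return 1
    fi
}

# Example use inside myscript.sh (lab/name stands for your own directory):
# upload_to_se fichier.txt root://sbgdcache.in2p3.fr/vo.scigne.fr/lab/name/fichier.txt || exit 1
```

Exiting with a non-zero code on failure lets the job's exit code reflect the failed transfer.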

Once the myjob.xrsl and myscript.sh files are created, the job can be submitted to the ARC CE with the following command:

$ arcsub -c sbgce1.in2p3.fr myjob.xrsl
Job submitted with jobid: gsiftp://sbgce1.in2p3.fr:2811/jobs/VnhGHkl9RUnhJKeJ7oqv78RnGBFKFvDGEDDmxDFKDmABFKDmnKeGDn

The -c option selects the ARC CE server to which the job is submitted.

The identifier of the job is stored in a local database, ${HOME}/.arc/jobs.dat.
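When submissions are scripted, the job identifier printed by arcsub can be captured for later use with arcstat and arcget. The sketch below assumes the output line has the form shown above ("Job submitted with jobid: ..."); the function names extract_jobid and submit_job are hypothetical. Since arcsub also records identifiers in the local job database, arcstat -a can alternatively query all recorded jobs at once.

```shell
#!/bin/bash
# Hypothetical helper: extract the job ID from arcsub's output (read on stdin).
# Assumes arcsub prints a line of the form "Job submitted with jobid: <ID>".
extract_jobid() {
    sed -n 's/^Job submitted with jobid: //p'
}

# Hypothetical helper: submit a job description and print only its job ID.
# Usage: submit_job CE XRSL_FILE
submit_job() {
    local jobid
    jobid=$(arcsub -c "$1" "$2" | extract_jobid)
    if [ -z "$jobid" ]; then
        echo "submission failed or job ID not found" >&2
        return 1
    fi
    echo "$jobid"
}
```

For example, `JOBID=$(submit_job sbgce1.in2p3.fr myjob.xrsl) && echo "$JOBID" >> myjobs.txt` keeps a personal list of submitted jobs.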

Monitoring job status¶

To monitor the status of a job, run the arcstat command with the job identifier:

$ arcstat gsiftp://sbgce1.in2p3.fr:2811/jobs/VnhGHkl9RUnhJKeJ7oqv78RnGBFKFvDGEDDmxDFKDmABFKDmnKeGDn
Job: gsiftp://sbgce1.in2p3.fr:2811/jobs/VnhGHkl9RUnhJKeJ7oqv78RnGBFKFvDGEDDmxDFKDmABFKDmnKeGDn
Name: mysimplejob
State: Finishing
Status of 1 jobs was queried, 1 jobs returned information

Even for a short job, it may take some time before arcstat reports an updated status.
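Instead of re-running arcstat by hand, the status can be polled until the job reaches a final state. This is a sketch under assumptions: it parses the "State:" line of the arcstat output shown above, treats Finished, Failed, Killed and Deleted as final ARC job states, and uses an arbitrary 5-minute default interval to avoid querying the CE too often. The function name wait_for_job is hypothetical.

```shell
#!/bin/bash
# Hypothetical helper: poll arcstat until the job reaches a final state.
# Usage: wait_for_job JOBID [INTERVAL_SECONDS]
# Returns 0 if the job ends in the Finished state, 1 otherwise.
wait_for_job() {
    local jobid="$1" interval="${2:-300}" state
    while true; do
        # Take the first word after "State:" in the arcstat output
        state=$(arcstat "$jobid" 2>/dev/null | awk '/State:/ {print $2; exit}')
        echo "$(date '+%H:%M:%S') ${state:-unknown}" >&2
        case "$state" in
            Finished) return 0 ;;
            Failed|Killed|Deleted) return 1 ;;
        esac
        sleep "$interval"
    done
}
```

The loop has no upper bound: if the job never reaches a final state, interrupt it with Ctrl+C.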

Getting the output files¶

Once the job is finished (status Finished), the output files defined by the stdout and stderr attributes can be retrieved with:

$ arcget gsiftp://sbgce1.in2p3.fr:2811/jobs/VnhGHkl9RUnhJKeJ7oqv78RnGBFKFvDGEDDmxDFKDmABFKDmnKeGDn

The files are downloaded into a local directory named after the job identifier, VnhGHkl9RUnhJKeJ7oqv78RnGBFKFvDGEDDmxDFKDmABFKDmnKeGDn.
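The download directory is named after the last component of the job identifier, so it can be derived from the ID with shell parameter expansion. A minimal sketch with hypothetical function names (job_dir, fetch_output) that retrieves the results and prints the job's standard output; it assumes the (stdout = "stdout") attribute from myjob.xrsl and that arcget is run from the current directory.

```shell
#!/bin/bash
# Hypothetical helper: the local directory created by arcget is named
# after the last path component of the job ID.
job_dir() {
    echo "${1##*/}"
}

# Hypothetical helper: retrieve a finished job's outputs and print its stdout.
# Assumes the job description used (stdout = "stdout").
fetch_output() {
    local jobid="$1" dir
    dir=$(job_dir "$jobid")
    arcget "$jobid" || return 1
    cat "$dir/stdout"
}
```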

Supplementary Documentation¶

The reference below points to documentation that gives a more in-depth view of the ARC CE client and the computing grid: